From the faint hum of a tuning fork to the booming bass of a concert hall, audio signals shape the way we experience sound every day. But what exactly are audio signals, and why do they matter so much in music, communication, and technology? Let’s dive into the fascinating world of frequencies, waveforms, and the technology that brings them to life.

Understanding Audio Signals

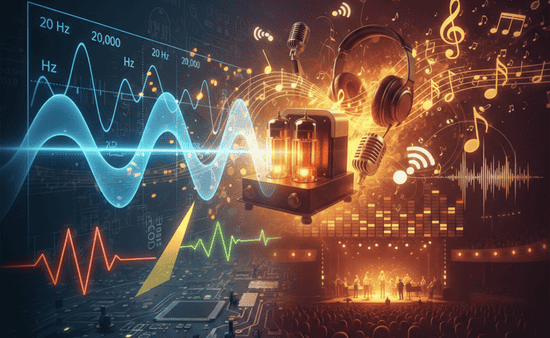

At its core, an audio signal is a representation of sound, usually in the form of an electrical voltage that varies over time. These signals can be captured from natural sources, such as a voice or an instrument, or generated electronically. Audio signals are usually described by the frequencies they contain, measured in hertz (Hz). One hertz is one cycle of a wave per second, and the human ear typically perceives sounds between about 20 Hz and 20,000 Hz (20 kHz), with the upper limit falling as people age.
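Because frequency counts cycles per second, the duration of one cycle (its period) is simply the reciprocal, T = 1/f. The Python sketch below works through that arithmetic for both ends of the hearing range. It also converts frequency to wavelength in air, which assumes a speed of sound of about 343 m/s (air at roughly 20 °C), a figure not given in the text above.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: speed of sound in air at roughly 20 °C


def period_seconds(freq_hz: float) -> float:
    """Duration of one cycle: T = 1 / f."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return 1.0 / freq_hz


def wavelength_m(freq_hz: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance one cycle occupies in the medium: wavelength = speed / f."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed_m_s / freq_hz


# Low edge, middle, and high edge of typical human hearing.
for f in (20.0, 1_000.0, 20_000.0):
    print(f"{f:>8.0f} Hz: period {period_seconds(f) * 1e3:7.3f} ms, "
          f"wavelength {wavelength_m(f):6.3f} m")
```

At 20 Hz one cycle lasts 50 ms and spans about 17 m of air, while at 20 kHz it lasts only 50 µs: a thousandfold difference across the audible range.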

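Audio signals can be analog or digital. Analog signals are continuous and mirror the sound waves they represent, while digital signals measure (sample) those waves at discrete, regular intervals and store each measurement as a number, allowing computers and modern devices to process and store sound efficiently. To capture a given frequency faithfully, the sample rate must be more than twice that frequency (the Nyquist-Shannon sampling theorem), which is why CD audio uses 44,100 samples per second to cover the roughly 20 kHz limit of human hearing. Understanding the difference between these two forms is crucial for audio engineers, musicians, and hobbyists who work with sound reproduction.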
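To make sampling concrete, here is a minimal Python sketch, not a production converter, that samples a 440 Hz sine tone at the CD rate of 44,100 samples per second and then quantizes each sample to a signed 16-bit integer, the sample format CD audio uses. The function names are illustrative, and the plain rounding and clipping stand in for the more careful processing (such as dithering) that real converters may apply.

```python
import math

SAMPLE_RATE_HZ = 44_100  # CD-quality sample rate
MAX_16BIT = 32_767       # largest positive value of a signed 16-bit integer


def nyquist_hz(sample_rate_hz: float) -> float:
    """Highest frequency a given sample rate can represent without aliasing."""
    return sample_rate_hz / 2.0


def sample_sine(freq_hz: float, sample_rate_hz: int, duration_s: float) -> list[float]:
    """Measure a unit-amplitude sine wave at discrete, evenly spaced instants."""
    n_samples = round(sample_rate_hz * duration_s)
    return [math.sin(2.0 * math.pi * freq_hz * n / sample_rate_hz) for n in range(n_samples)]


def quantize_16bit(samples: list[float]) -> list[int]:
    """Clip each sample to [-1.0, 1.0], then round it to the nearest 16-bit step."""
    return [round(max(-1.0, min(1.0, s)) * MAX_16BIT) for s in samples]


tone = sample_sine(440.0, SAMPLE_RATE_HZ, duration_s=0.01)  # 10 ms of a 440 Hz tone
pcm = quantize_16bit(tone)
print(f"Nyquist limit: {nyquist_hz(SAMPLE_RATE_HZ):.0f} Hz")
print(f"{len(pcm)} samples, first five: {pcm[:5]}")
```

Ten milliseconds at 44.1 kHz yields 441 integers; that list of numbers is all a digital system stores of the tone.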

The Role of Frequency in Audio

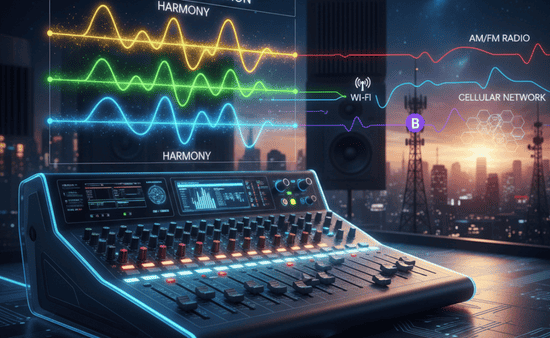

Frequency is a key factor in how we perceive sound. Low frequencies produce bass tones, mid-range frequencies convey most of the musical content, and high frequencies provide clarity and sparkle. When frequencies are combined in harmony, they create the rich textures we hear in music. This is why audio professionals carefully balance frequencies using tools like equalizers and mixers to craft a desired sonic experience.
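An equalizer boosts or cuts chosen frequency ranges. Real equalizers typically use more elaborate filters with several adjustable bands; the Python sketch below instead uses the simplest possible case, a one-pole low-pass filter acting as a treble cut, to show how a filter lets low frequencies through while attenuating high ones. The 1 kHz cutoff and 48 kHz sample rate are arbitrary assumptions chosen for illustration.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sample_rate_hz):
    """Simple treble cut: each output moves a fraction `a` of the way toward the input."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate_hz)
    out, y = [], 0.0
    for x in samples:
        y += a * (x - y)  # y[n] = y[n-1] + a * (x[n] - y[n-1])
        out.append(y)
    return out


def steady_state_gain(freq_hz, cutoff_hz=1_000.0, sample_rate_hz=48_000, duration_s=0.2):
    """Filter a unit-amplitude sine and report its peak output level."""
    n = int(sample_rate_hz * duration_s)
    tone = [math.sin(2.0 * math.pi * freq_hz * i / sample_rate_hz) for i in range(n)]
    filtered = one_pole_lowpass(tone, cutoff_hz, sample_rate_hz)
    # Skip the first half so the filter's start-up transient has died away.
    return max(abs(v) for v in filtered[n // 2:])


for f in (100, 1_000, 10_000):
    print(f"{f:>6} Hz -> peak gain {steady_state_gain(f):.2f}")
```

With these settings a 100 Hz tone passes almost unchanged, a tone at the 1 kHz cutoff comes out at roughly 0.7 of its input level (about -3 dB), and a 10 kHz tone is cut to roughly a tenth.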

Beyond music, frequency management is essential in radio and communication technologies. Radio-frequency (RF) electromagnetic waves carry signals wirelessly over long distances; audio is not transmitted as sound but rides on a much higher-frequency carrier wave. Different frequency bands are assigned to different uses, so AM/FM radio, Wi-Fi, Bluetooth, and cellular networks each operate in specific ranges to limit interference.
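In AM (amplitude modulation) radio, the audio scales the amplitude of a carrier wave, and a receiver recovers the audio by following the carrier's envelope. The Python sketch below illustrates the idea with scaled-down, hypothetical frequencies (a 1 kHz tone on a 100 kHz carrier, simulated at 1 MHz) so it runs quickly; real medium-wave AM carriers lie roughly between 0.5 and 1.7 MHz, and the peak-tracking detector here is a simplification of what a real receiver does.

```python
import math

SAMPLE_RATE_HZ = 1_000_000  # hypothetical simulation rate
CARRIER_HZ = 100_000        # scaled-down carrier: 10 samples per cycle
TONE_HZ = 1_000             # audio tone to transmit
DEPTH = 0.5                 # modulation depth: how strongly the audio varies the carrier


def am_modulate(message, carrier_hz, sample_rate_hz, depth):
    """Scale a cosine carrier by (1 + depth * m[n]) so its amplitude follows the audio."""
    return [
        (1.0 + depth * m) * math.cos(2.0 * math.pi * carrier_hz * n / sample_rate_hz)
        for n, m in enumerate(message)
    ]


def envelope(signal, window):
    """Crude envelope detector: the largest magnitude within a trailing window."""
    return [max(abs(v) for v in signal[max(0, n - window + 1): n + 1])
            for n in range(len(signal))]


n_samples = SAMPLE_RATE_HZ // 500  # 2 ms, i.e. two cycles of the 1 kHz tone
audio = [math.sin(2.0 * math.pi * TONE_HZ * n / SAMPLE_RATE_HZ) for n in range(n_samples)]
radio = am_modulate(audio, CARRIER_HZ, SAMPLE_RATE_HZ, DEPTH)
# A window of one carrier period is long enough to catch each carrier peak.
recovered = envelope(radio, window=SAMPLE_RATE_HZ // CARRIER_HZ)
print(f"envelope range: {min(recovered[10:]):.3f} to {max(recovered):.3f}")
```

The recovered envelope swings between about 0.5 and 1.5, tracing 1 + 0.5 times the original tone; removing the constant offset gives back the audio.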

Waveforms: The Shape of Sound

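Not all audio signals are created equal. The shape of a waveform determines which harmonics (whole-number multiples of the fundamental frequency) it contains, and that harmonic content gives the sound its character, or timbre. Common waveforms include:

- Sine waves: Smooth, pure tones containing a single frequency and no harmonics, often used for testing and synthesis.

- Square waves: Harsh, buzzy sounds built only from odd harmonics, found in electronic music and some alarm systems.

- Sawtooth waves: Bright tones containing every harmonic, odd and even, which makes them ideal for synthesizers and certain musical effects.

- Triangle waves: Like square waves, they contain only odd harmonics, but those harmonics fade much faster, so the tone is softer and closer to a sine wave.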

Understanding waveforms helps sound designers, audio engineers, and musicians manipulate sound creatively and technically, whether for music production, broadcast, or experimental applications.
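The harmonic differences between these waveforms can be checked numerically. The Python sketch below generates one cycle of each waveform from an idealized formula (with no anti-aliasing, so it is not how a production synthesizer would generate them) and measures the first five harmonics with a direct discrete Fourier transform. The function names are illustrative.

```python
import cmath
import math

# Each waveform maps a phase in [0, 1) to a value in [-1, 1].
def sine(phase):
    return math.sin(2.0 * math.pi * phase)

def square(phase):
    return 1.0 if phase < 0.5 else -1.0

def sawtooth(phase):
    return 2.0 * phase - 1.0

def triangle(phase):
    return 4.0 * abs(phase - 0.5) - 1.0


def harmonic_amplitudes(wave, n_harmonics=5, n_samples=1024):
    """Amplitudes of harmonics 1..n_harmonics of one cycle, via a direct DFT."""
    cycle = [wave(i / n_samples) for i in range(n_samples)]
    amps = []
    for k in range(1, n_harmonics + 1):
        bin_k = sum(x * cmath.exp(-2j * math.pi * k * i / n_samples)
                    for i, x in enumerate(cycle))
        amps.append(2.0 * abs(bin_k) / n_samples)  # scale DFT bin to a sine amplitude
    return amps


for name, wave in [("sine", sine), ("square", square),
                   ("sawtooth", sawtooth), ("triangle", triangle)]:
    print(f"{name:<9}", " ".join(f"{a:.3f}" for a in harmonic_amplitudes(wave)))
```

The printout should show the sine with energy only in its first harmonic; the square with odd harmonics at roughly 1, 1/3, and 1/5 of the fundamental; the sawtooth with every harmonic at roughly 1/n; and the triangle with odd harmonics at roughly 1/n², which is why it sounds so much softer than the square.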

Audio Signal Technology

Audio signals are transmitted, amplified, and converted in various ways. In tube amplifiers, for instance, a push-pull output transformer is often used to couple the signal from the tubes to the speakers. It matches the tubes’ high output impedance to the speaker’s low impedance (the impedance ratio equals the square of the transformer’s turns ratio), and because the two tubes in a push-pull pair conduct in opposite phases, their even-order harmonic distortion largely cancels in the transformer. Details like this show where physics and art meet in audio engineering: careful design lets the nuances of the original signal be reproduced faithfully.
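The even-harmonic cancellation in a push-pull stage can be shown with a toy model. The Python sketch below assumes a hypothetical tube transfer curve, x + a2·x² + a3·x³ with made-up coefficients, rather than a real tube's characteristics. In push-pull, the second tube receives an inverted copy of the signal and the center-tapped transformer primary responds to the difference of the two tubes' outputs, so every even-power term cancels while odd-power terms remain.

```python
import cmath
import math


def tube_stage(x, a2=0.2, a3=0.05):
    """Hypothetical tube transfer curve: linear gain plus 2nd- and 3rd-order distortion."""
    return x + a2 * x * x + a3 * x * x * x


def harmonic(samples, k):
    """Amplitude of harmonic k in one cycle of samples, via a direct DFT bin."""
    n = len(samples)
    acc = sum(s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(samples))
    return 2.0 * abs(acc) / n


N = 1024
drive = [0.5 * math.sin(2.0 * math.pi * i / N) for i in range(N)]  # one cycle of input

single_ended = [tube_stage(x) for x in drive]
# Push-pull: the second tube sees the inverted signal, and the transformer output
# follows the difference of the two tubes, so x**2 terms (and their DC offset) cancel.
push_pull = [tube_stage(x) - tube_stage(-x) for x in drive]

for name, out in (("single-ended", single_ended), ("push-pull", push_pull)):
    h1, h2, h3 = (harmonic(out, k) for k in (1, 2, 3))
    print(f"{name:<13} 2nd/1st = {h2 / h1:.4f}, 3rd/1st = {h3 / h1:.4f}")
```

With these coefficients the single-ended stage shows a second harmonic about 5% of the fundamental, while the push-pull version shows essentially none; the third-harmonic ratio is the same in both, since push-pull does nothing for odd-order distortion.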

Microphones, mixers, amplifiers, and speakers each handle the audio signal in turn. Microphones convert sound waves into electrical signals, mixers combine and balance multiple signals, amplifiers increase signal strength, and speakers convert the signals back into audible sound. The quality of each step affects the fidelity of the final audio output.
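Because each stage in the chain multiplies the signal level, engineers usually express gain in decibels, where a voltage ratio becomes 20·log10(Vout/Vin) and the gains of chained stages simply add. The Python sketch below assumes a hypothetical chain with made-up gain figures; real preamp, mixer, and amplifier gains vary widely from setup to setup.

```python
import math


def gain_db(v_out, v_in):
    """Voltage gain in decibels: 20 * log10(Vout / Vin)."""
    return 20.0 * math.log10(v_out / v_in)


# Hypothetical signal chain: each stage's voltage gain as a plain ratio.
stage_gains = {"microphone preamp": 100.0, "mixer channel": 2.0, "power amplifier": 20.0}

total_ratio = 1.0
for stage, ratio in stage_gains.items():
    total_ratio *= ratio  # linear gains multiply
    print(f"{stage:<18} x{ratio:>6.1f} = {gain_db(ratio, 1.0):+.1f} dB")

total_db = sum(gain_db(r, 1.0) for r in stage_gains.values())  # decibel gains add
print(f"total x{total_ratio:.0f} = {gain_db(total_ratio, 1.0):+.1f} dB "
      f"(sum of stages: {total_db:+.1f} dB)")
```

Here stage gains of 40 dB, about 6 dB, and about 26 dB add up to the same 72 dB that the overall 4000x ratio gives directly.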

The Future of Audio Signals

Modern advancements continue to expand the possibilities of audio. High-resolution digital audio, 3D spatial sound, and advanced streaming protocols allow listeners to experience music and communication in unprecedented ways. Meanwhile, RF technology keeps improving wireless transmission, ensuring clear signals for both consumer devices and professional applications.

Understanding audio signals is more than just technical knowledge; it’s about appreciating how sound interacts with technology and human perception. From the single frequency of a simple tone to the complex harmonics of an orchestral performance, audio signals connect science and art, shaping the way we listen, communicate, and enjoy our sonic world.

In Conclusion

From the invisible waves that carry radio broadcasts to the intricate frequencies that fill a concert hall, audio signals are the threads weaving together our auditory experiences. Whether you’re a music enthusiast, a sound engineer, or simply curious about how devices bring sound to life, exploring audio signals offers a deeper appreciation for the symphony of technology and physics behind every note and beat.